Modern enterprises are grappling with a challenge: over years of business, they have accumulated vast amounts of data, yet many struggle to make this data accessible and useful for streamlining operations and sparking innovation. The rapid emergence of powerful Large Language Models (LLMs) makes it possible to extract value from this existing but often disorganized data. Well-designed AI systems excel at extracting insights from diverse, imperfect data sets, turning meticulous organization from a rigid foundational requirement into an ongoing improvement process.

Successful enterprises recognize that data only becomes valuable when transformed into actionable insights. By leveraging Retrieval-Augmented Generation (RAG) architectures, LLMs can intelligently retrieve relevant information from vast internal repositories based on the intent behind each query, rather than simply matching keywords. This approach not only reduces the immediate need for extensive data standardization but can also provide a feedback loop, enhancing information retrieval for people and AI systems alike.

Building on this foundation, let’s dive into some key considerations – from data accuracy and infrastructure challenges, to the critical role of human oversight and transparency.

Data Organization vs. Data Accuracy

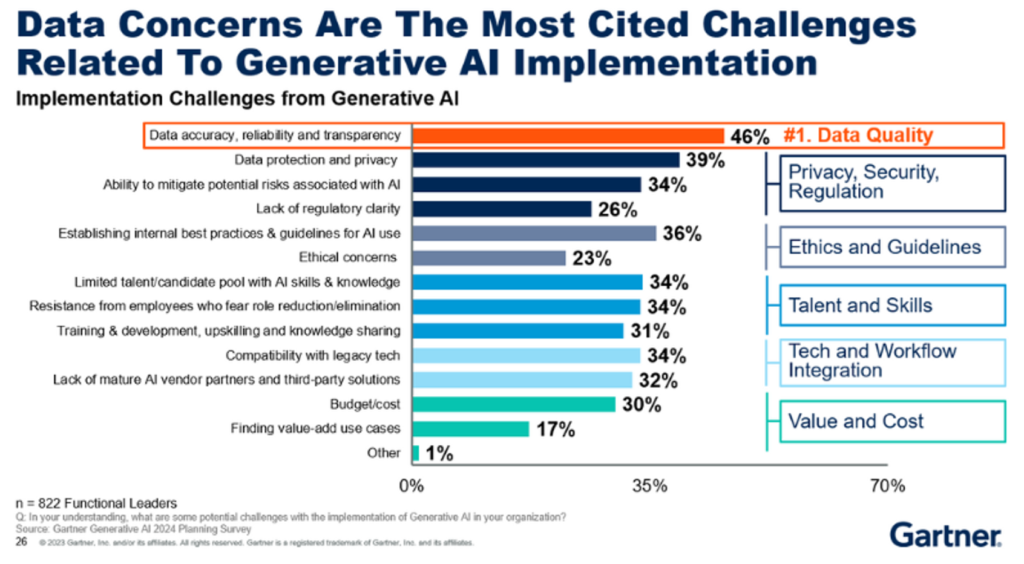

While modern AI initiatives no longer demand perfectly structured data, the quality of that data remains important. LLMs and RAG architectures are remarkably adept at extracting value from varied sources, but they depend on the underlying information being correct – as the adage goes, “garbage in, garbage out.” Inaccurate or outdated data can derail even the most sophisticated models. Of course, this problem isn’t unique to AI; humans struggle with it too. To that end, we recommend enterprises prioritize maintaining high data quality through human reviews and continuous feedback loops. Additionally, advanced post-RAG review techniques can be implemented, allowing LLMs to flag conflicting information they encounter for additional human review. During its 2024 Data & Analytics Summit, Gartner shared this prediction: “Through 2026, GenAI will reduce manually intensive data management costs up to 20% each year while enabling four times as many new use cases.” This forward-looking projection underscores that generative AI should ultimately reduce the burden of data management, rather than setting an unrealistic bar for organization.

Challenges to Consider

As organizations pursue generative AI projects, there are several hurdles that extend beyond the mechanics of data retrieval. Addressing these challenges early on lays a strong foundation for effective AI adoption.

Infrastructure and Capital Constraints

Generative AI is transformative, but its promise comes with significant infrastructure demands. High-performance GPUs to power these models are both expensive and often in short supply. The AI arms race has led to some of the largest capital investments in tech history, with Amazon, Microsoft, and Google even inking deals for nuclear power plants to power their AI investments. For most organizations, the return on investment for training, maintaining, and running their own proprietary LLMs simply does not exist. Instead, many companies leverage the on-demand capabilities of major cloud providers. This pay-as-you-go model aligns spending with actual usage and provides the agility to keep pace with rapid technological advances.

Security and Compliance

Integrating AI into enterprise workflows introduces critical considerations around data security and regulatory compliance. As an example, technologies like Data Loss Prevention (DLP) can be applied to both user queries and the underlying data sets. Should a business need to keep strict data boundaries, these technologies can even be embedded directly into the RAG pipeline, ensuring that sensitive information is filtered or masked before it ever reaches the AI model. Additionally, comprehensive observability and auditing mechanisms ensure transparency and accountability – often complemented by leveraging existing cloud provider agreements to secure data and prevent intellectual property from being used for model training.
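To make the idea of filtering inside the RAG pipeline concrete, here is a minimal sketch in Python. It assumes two simple regular-expression patterns and hypothetical helper names (`mask_sensitive`, `build_prompt`); a production DLP service would detect far more data types and use more robust methods than regular expressions.

```python
import re

# Hypothetical patterns for illustration only; real DLP tooling covers many
# more data types and does not rely on simple regular expressions.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def mask_sensitive(text: str) -> str:
    """Replace each match with a placeholder such as [EMAIL]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def build_prompt(query: str, retrieved_chunks: list[str]) -> str:
    """Mask both the user query and the retrieved context before prompting."""
    context = "\n".join(mask_sensitive(chunk) for chunk in retrieved_chunks)
    return f"Context:\n{context}\n\nQuestion: {mask_sensitive(query)}"
```

Because masking happens when the prompt is assembled, the same step covers both user queries and retrieved documents, and the model only ever sees the placeholders.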

AI as an Assistant, Not the Final Decision Maker

Even the most advanced AI systems are best regarded as strategic partners rather than autonomous decision makers. While these models excel at processing complex data sets and surfacing actionable insights, human judgment remains essential when the stakes are high. In critical scenarios and regulated industries, a human-in-the-loop approach ensures that ethical considerations, contextual details, and nuanced decision-making are factored into the final outcome. AI may earn greater autonomy as its reliability improves, but for now, human oversight remains the gold standard for business-critical applications.
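One way to picture a human-in-the-loop gate is as a simple routing rule, sketched below in Python. The `Recommendation` fields, the `route` function, and the 0.8 threshold are all hypothetical choices for illustration; each organization would define its own criteria for what counts as high stakes.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    summary: str
    confidence: float  # assumed model-reported score between 0 and 1
    high_stakes: bool  # assumed to be set by business rules


# Hypothetical threshold; tune per use case.
CONFIDENCE_THRESHOLD = 0.8


def route(rec: Recommendation) -> str:
    """Return 'human_review' or 'auto_approve' for a recommendation."""
    if rec.high_stakes or rec.confidence < CONFIDENCE_THRESHOLD:
        return "human_review"
    return "auto_approve"
```

In this sketch, anything flagged as high stakes goes to a person regardless of how confident the model is, which mirrors the principle that AI assists while humans decide.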

The Importance of Citations and Feedback

Trust is fundamental when deploying AI at enterprise scale, and transparent citations are a powerful way to foster that trust. When an AI model references internal data, providing clear links to the original sources allows decision makers to verify facts quickly. This “trust but verify” mindset not only reduces the risk of AI hallucinations going unnoticed but also offers users a pathway to deeper insights. Encouraging feedback on AI responses helps refine the system over time, providing actionable data to fuel continuous improvement. At scale, this feedback can be used for A/B testing different system prompts, new retrieval or chunking techniques, or entirely different AI models. It can even reveal where additional user training or UI/UX updates might be necessary.
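As a rough illustration of how citations and feedback can be captured together, the Python sketch below stores source links alongside each answer and computes the share of helpful ratings per variant. The `Answer` structure, the `variant` label, and the `helpful_rate` function are hypothetical names, and a real A/B test would also need enough responses to draw statistically sound conclusions.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Answer:
    text: str
    variant: str  # e.g. which system prompt or chunking strategy produced it
    citations: list[str] = field(default_factory=list)  # links to source documents


def helpful_rate(feedback: list[tuple[Answer, bool]]) -> dict[str, float]:
    """Share of answers marked helpful, grouped by variant."""
    totals = defaultdict(int)
    helpful = defaultdict(int)
    for answer, was_helpful in feedback:
        totals[answer.variant] += 1
        helpful[answer.variant] += int(was_helpful)
    return {variant: helpful[variant] / totals[variant] for variant in totals}
```

Comparing these rates across variants gives a simple first signal of which prompt, retrieval technique, or model users find more useful.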

The Road Ahead

Effectual’s holistic approach to cloud and AI innovation starts with a solid foundation: understanding your unique business challenges and identifying achievable solutions that provide real ROI. By aligning our enterprise capabilities and robust technical expertise, we employ a “do-with” methodology to skill up your teams. This empowers you to harness the transformative potential of generative AI without sacrificing oversight, control, or budgets.

Whether you’re just shaping your AI strategy or are deep into implementation, let Effectual help you navigate the complexities of AI transformation and drive industry-leading innovations.